Phone scams have been around for some time, but recent advances in AI technology are making it easier for bad actors to convince people they have kidnapped their loved ones.

Scammers use AI to replicate the voices of people’s family members in fake kidnapping schemes that convince victims to send money to the scammers in exchange for their loved ones’ safety.

The scheme recently targeted two victims in a Washington state school district.

Highline Public Schools in Burien, Wash., issued a notice on Sept. 25 warning community members that the two individuals had been targeted by “scammers falsely claiming to have kidnapped a family member.”

“The scammers played [AI-generated] audio recording of the family member, then demanded a ransom,” the school district wrote. “The Federal Bureau of Investigation (FBI) has seen a rise in these scams across the nation, with a particular focus on families who speak a language other than English.”

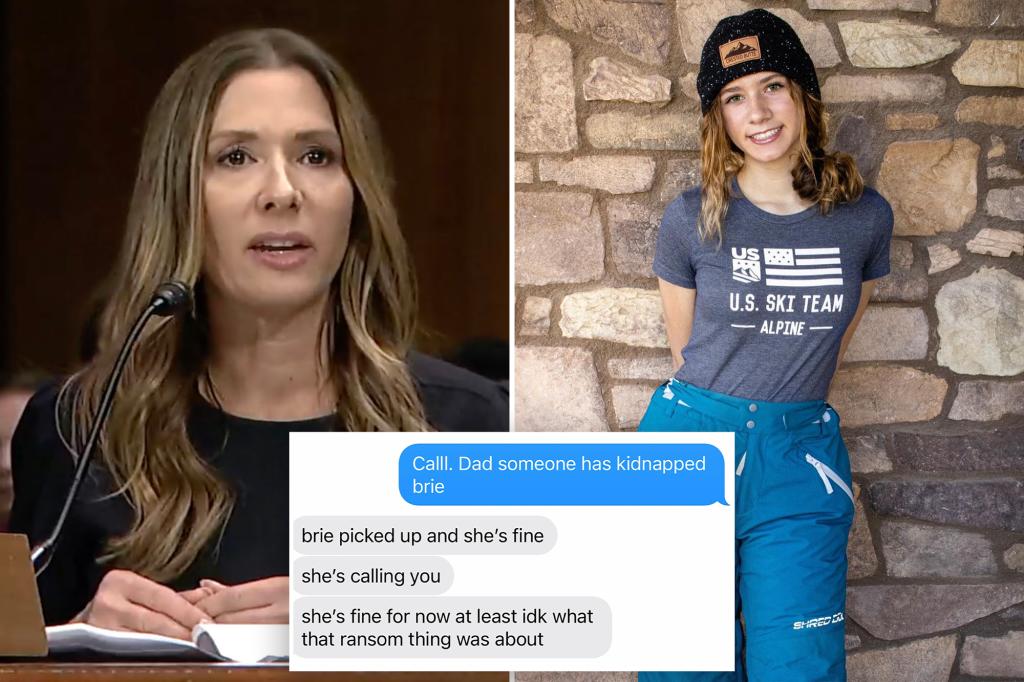

In June, Arizona mother Jennifer DeStefano testified before Congress about how scammers used AI to trick her into believing her daughter had been kidnapped in a plot to extort $1 million. She began by explaining her decision to answer a call from an unknown number on a Friday afternoon.

“I answered the phone with ‘Hello.’ On the other end was our daughter, Briana, sobbing and crying, saying ‘Mom,’” DeStefano wrote in her testimony to Congress. “I didn’t think anything of it at first. … I casually asked her what happened as I had her on speakerphone walking across the parking lot to meet her sister. Briana followed up with, ‘Mom, I messed up,’ with more crying and sobbing.”

At the time, DeStefano had no idea that a bad actor had used AI to replicate her daughter’s voice on the other line.

She then heard a man’s voice on the other end yelling urgently at her daughter as Briana, or at least her voice, continued to scream that bad men had “taken” her.

“A threatening and vulgar man took over the call. ‘Listen here, I have your daughter. You tell anyone, you call the cops, I am going to pump her stomach so full of drugs, I am going to have my way with her, drop her in [M]exico and you’ll never see her again!’ All the while, Briana was in the background, desperately pleading, ‘Mom, help me!’” DeStefano wrote.

The men demanded a $1 million ransom in exchange for Briana’s safety while DeStefano tried to contact friends, family and police to help her locate her daughter. When DeStefano told the scammers she did not have $1 million, they demanded $50,000 in cash to be picked up in person.

While these negotiations were going on, DeStefano’s husband found Briana safe at home in her bed, unaware of the scam involving her own voice.

“How could she be safe with her father and still be in the possession of kidnappers? It didn’t make any sense. I needed to talk to Bree,” DeStefano wrote. “I could not believe she was safe until I heard her voice say she was. I asked her over and over again if it was really her, if she was really safe, again, was this really Bree? Are you sure you are really safe?! My mind was spinning. I can’t remember how many times I needed reassurance, but when I finally realized that she was safe, I was furious.”

DeStefano concluded her testimony by noting how artificial intelligence makes it harder for people to trust what they see with their own eyes and hear with their own ears, particularly in virtual settings or over the phone.

Bad actors have targeted victims in the United States and around the world. They are able to replicate a person’s voice through two main tactics: first, by collecting data from a person’s voice if they answer an unknown call from scammers, who then use AI to manipulate that same voice to speak sentences of the scammers’ choosing; and second, by collecting data from a person’s voice through public videos posted on social media.

That is according to Beenu Arora, CEO and chairman of Cyble, a cybersecurity company that uses AI-driven solutions to stop bad actors.

“So you are basically talking to them, assuming there’s somebody trying to have a conversation, or … a telemarketing call, for example. The intent is to get the right data through your voice, which they can go and mimic … through some other AI model,” Arora explained. “And this is becoming a lot more prominent now.”

He added that “extracting audio from a video” on social media “is not that difficult.”

“The longer you talk, the more data they are collecting,” Arora said of the scammers, “and also your voice modulations and your slang and the way you speak. But … when you get on these ghost calls or blank calls, I actually recommend not talking much.”

The National Institutes of Health (NIH) recommends that targets of this crime be wary of ransom calls from unfamiliar area codes or from numbers that are not the victim’s phone. These bad actors also go to “great lengths” to keep victims on the phone so they do not have an opportunity to contact authorities. They also frequently demand that ransom money be sent via a wire transfer service.

The agency also recommends that people targeted by this crime try to slow the situation down, ask to speak with the victim directly, ask for a description of the victim, listen carefully to the victim’s voice, and try to contact the victim separately via call, text or direct message.

The NIH released a public service announcement saying that several NIH employees have fallen victim to this kind of scam, which “usually begins with a phone call saying that a member of your family is being held captive.” Scammers previously would claim that a victim’s family member had been kidnapped, sometimes with the sound of screams in the background of a phone call or video message.

More recently, however, due to advances in AI, scammers can replicate a loved one’s voice using social media videos to make the kidnapping plot seem more real. In other words, bad actors can use AI to replicate the voice of a loved one, using publicly available videos online, to trick their targets into believing that their family members or friends have been kidnapped.

Callers typically provide the victim with information on how to send money in exchange for the safe return of their loved one, but experts suggest victims of this crime take a moment to pause and consider whether what they are hearing on the other line is real, even in a moment of panic.

“My humble advice … is that when you get such kind[s] of alarming messages, or somebody is trying to push you or do things [with a sense] of urgency, it’s always better to stop and think before you act,” Arora said. “I think as a society, it’s going to become a lot more challenging to identify real versus fake because of just the advancement that we are seeing.”

Arora added that while some AI-driven tools are being used to proactively identify and combat AI scams, the rapid pace of this developing technology means there is a long way to go for those working to stop bad actors from taking advantage of unsuspecting victims. Anyone who believes they have fallen victim to this kind of scam should contact their local police department.